Hello! I am a Researcher in the Advanced Agent Lab at LG AI Research (Ann Arbor, Michigan), working with Prof. Honglak Lee. I received my PhD in Computer Science from Seoul National University (advisors: Prof. Gunhee Kim and Prof. Hyun Oh Song).

My research interests lie mainly in building capable AI agents for decision-making in challenging, real-world tasks, using language and multimodal models and reinforcement learning.

Selected Professional Experience

- LG AI Research (Ann Arbor, Michigan) (Aug. 2023 - Present)

  - Researcher in the Advanced Agent Lab

  - Manager: Prof. Honglak Lee

Publications (*: equal contribution)

Language and Multimodal Models and Agents

| Towards Minimal Fine-Tuning of VLMs Tiange Luo, Lajanugen Logeswaran, Jaekyeom Kim, Justin Johnson, Honglak Lee Preprint [arxiv] | |

| Beyond Blind Following: Evaluating Robustness of LLM Agents under Imperfect Guidance Yao Fu, Ran Qiu, Xinhe Wang, Jacob Sansom, Sathvika Ayyappa Prabhu, Huijie Tang, Jaekyeom Kim, Sungryull Sohn, Honglak Lee EACL 2026 | |

| MLRC-Bench: Can Language Agents Solve Machine Learning Research Challenges? Yunxiang Zhang, Muhammad Khalifa, Shitanshu Bhushan, Grant D Murphy, Lajanugen Logeswaran, Jaekyeom Kim, Moontae Lee, Honglak Lee, Lu Wang NeurIPS 2025 Datasets and Benchmarks Track (accepted) [arxiv] | |

| Scaling Web Agent Training through Automatic Data Generation and Fine-grained Evaluation Lajanugen Logeswaran, Jaekyeom Kim, Sungryull Sohn, Creighton Glasscock, Honglak Lee COLM 2025 [paper] | |

| Process Reward Models That Think Muhammad Khalifa, Rishabh Agarwal, Lajanugen Logeswaran, Jaekyeom Kim, Hao Peng, Moontae Lee, Honglak Lee, Lu Wang Workshop on Test-time Scaling and Reasoning Models at COLM 2025 [arxiv] | |

| Our large-scale analysis with our AI agent suggests that ~80% of popular datasets with commercially permissive licenses are in fact unlikely to be commercially viable because of how those datasets were constructed. | Do Not Trust Licenses You See: Dataset Compliance Requires Massive-Scale AI-Powered Lifecycle Tracing Jaekyeom Kim*, Sungryull Sohn*, Gerrard Jeongwon Jo, Jihoon Choi, Kyunghoon Bae, Hwayoung Lee, Yongmin Park, Honglak Lee Preprint [arxiv] [post] [project] |

| Interactive and Expressive Code-Augmented Planning with Large Language Models Anthony Z. Liu, Xinhe Wang, Jacob Sansom, Yao Fu, Jongwook Choi, Sungryull Sohn, Jaekyeom Kim, Honglak Lee ACL 2025 [paper] [arxiv] | |

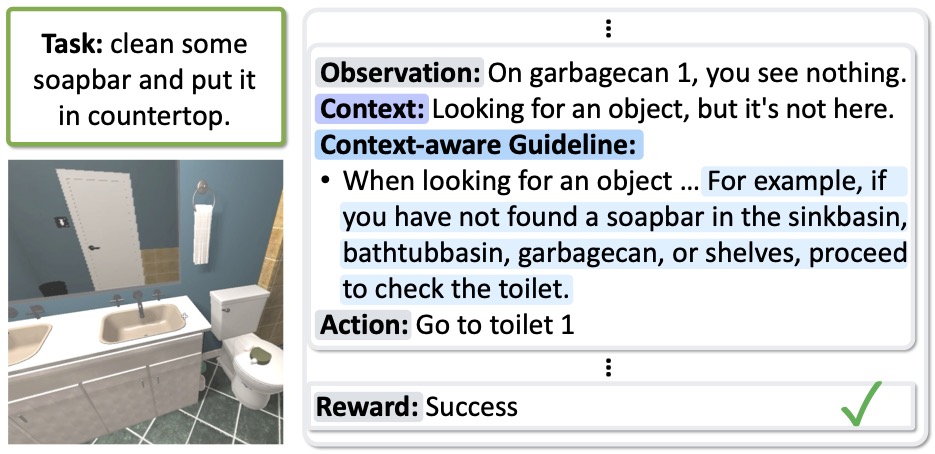

| AutoGuide: Automated Generation and Selection of Context-Aware Guidelines for Large Language Model Agents Yao Fu*, Dong-Ki Kim*, Jaekyeom Kim, Sungryull Sohn, Lajanugen Logeswaran, Kyunghoon Bae, Honglak Lee NeurIPS 2024 [paper] [arxiv] |

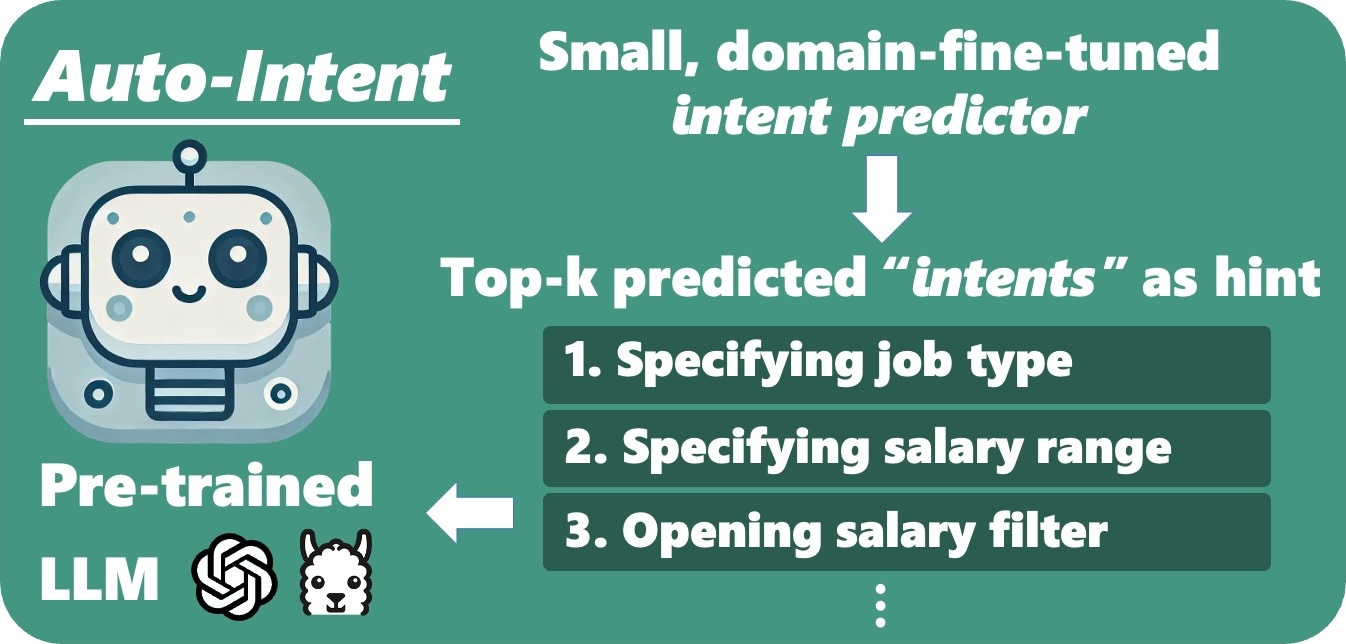

| Auto-Intent: Automated Intent Discovery and Self-Exploration for Large Language Model Web Agents Jaekyeom Kim, Dong-Ki Kim, Lajanugen Logeswaran, Sungryull Sohn, Honglak Lee EMNLP 2024 (Findings) [paper] [arxiv] [poster] |

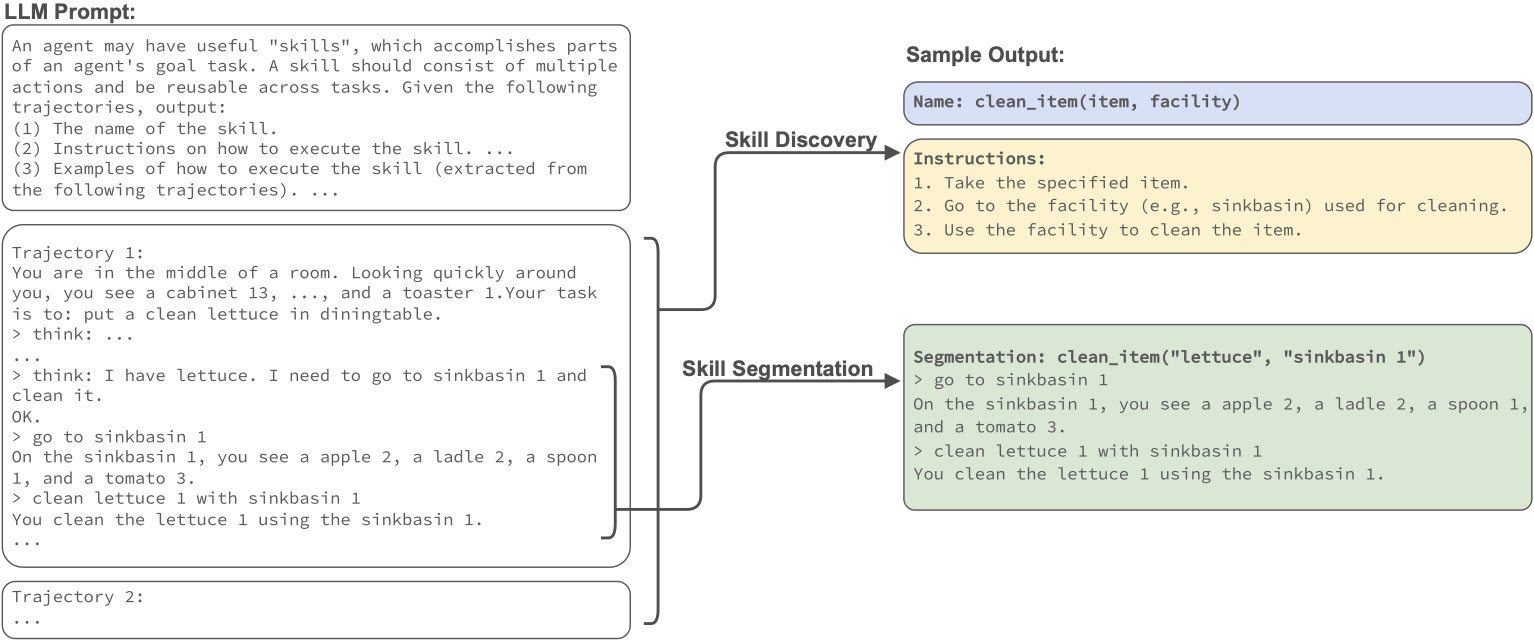

| SkillAct: Using Skill Abstractions Improves LLM Agents Anthony Z. Liu, Jongwook Choi, Sungryull Sohn, Yao Fu, Jaekyeom Kim, Dong-Ki Kim, Xinhe Wang, Jaewon Yoo, Honglak Lee ICML 2024 Workshop on LLMs and Cognition [paper] |

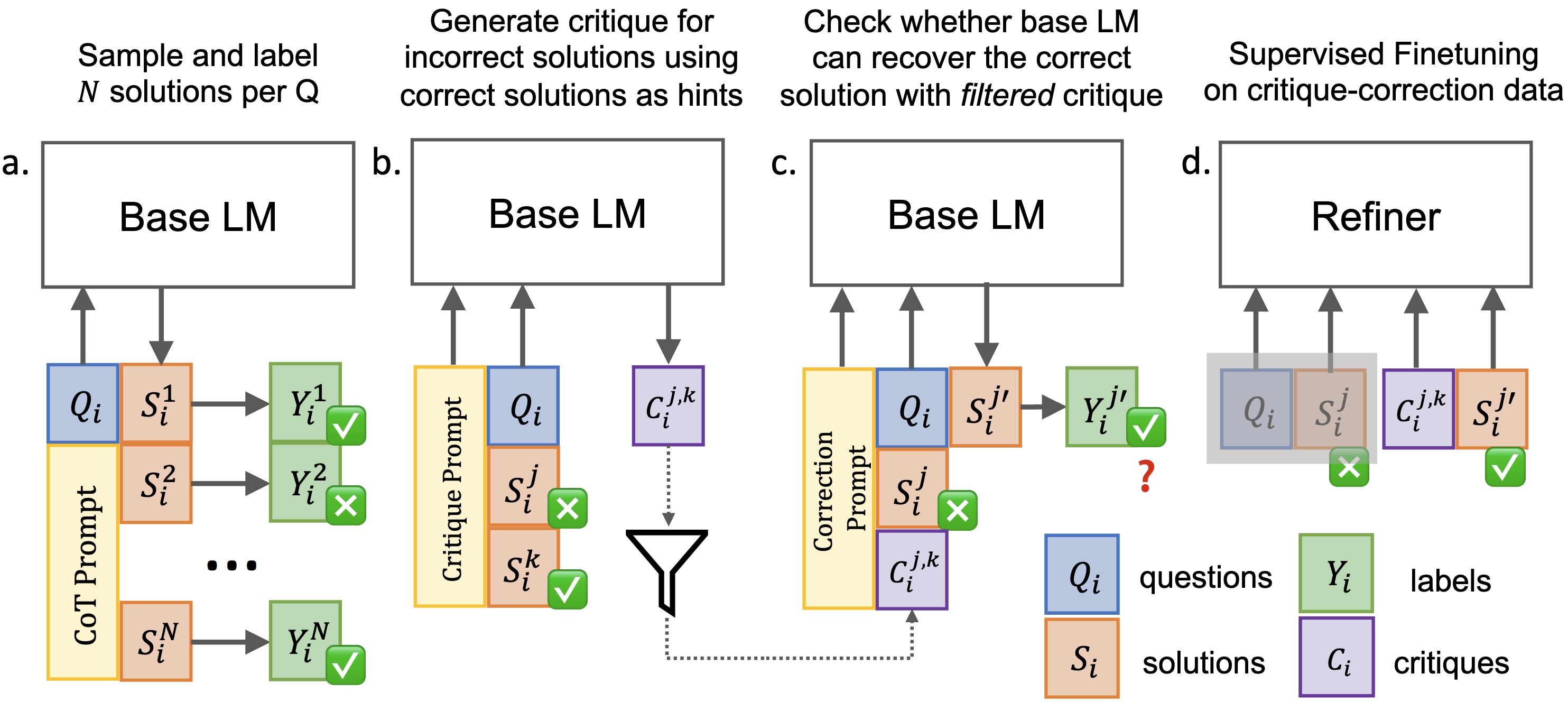

| Small Language Models Need Strong Verifiers to Self-Correct Reasoning Yunxiang Zhang, Muhammad Khalifa, Lajanugen Logeswaran, Jaekyeom Kim, Moontae Lee, Honglak Lee, Lu Wang ACL 2024 (Findings) [paper] [arxiv] |

Reinforcement Learning and Skill Discovery

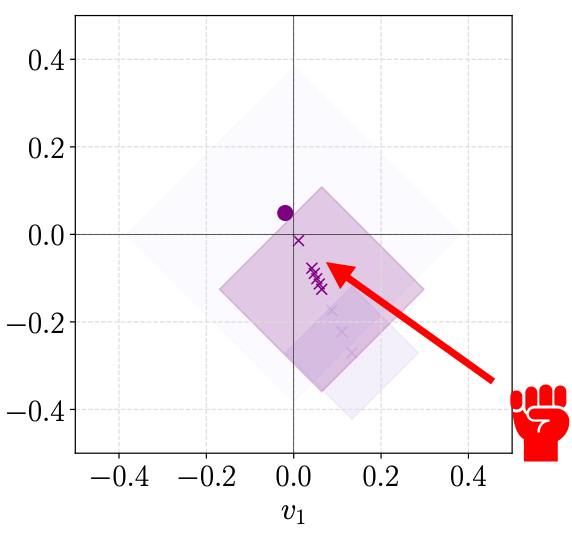

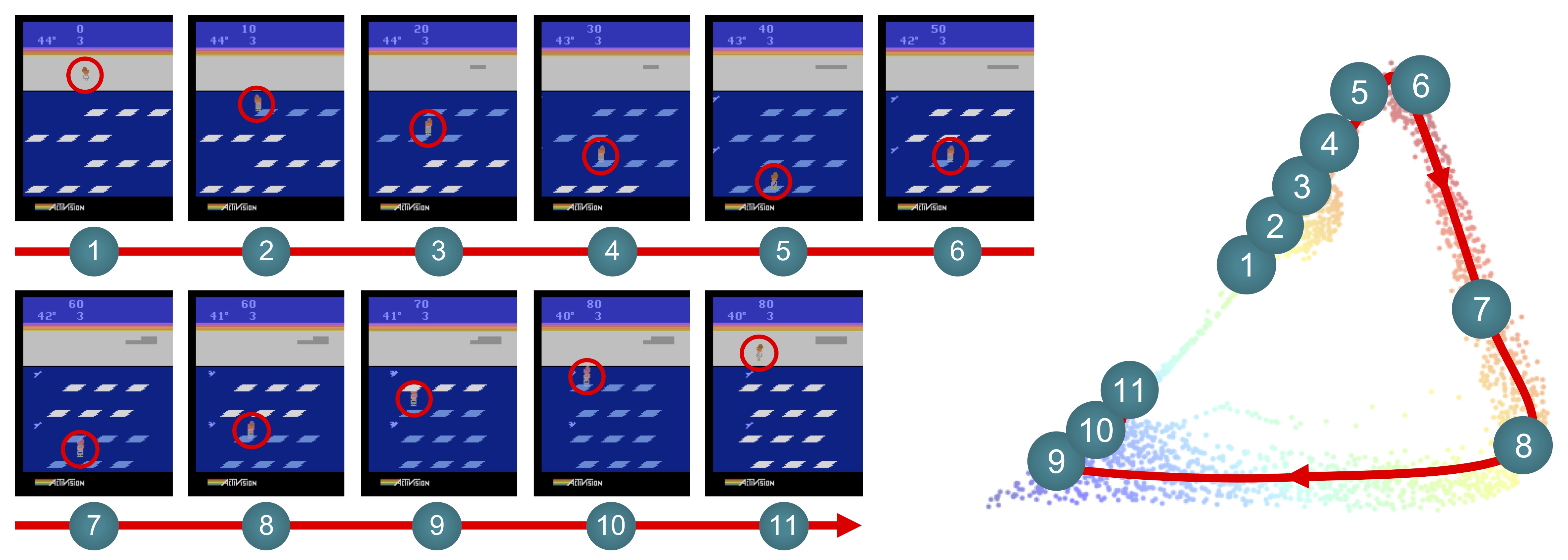

| Constrained GPI for Zero-Shot Transfer in Reinforcement Learning Jaekyeom Kim, Seohong Park, Gunhee Kim NeurIPS 2022 [paper] [arxiv] [talk] [code] |

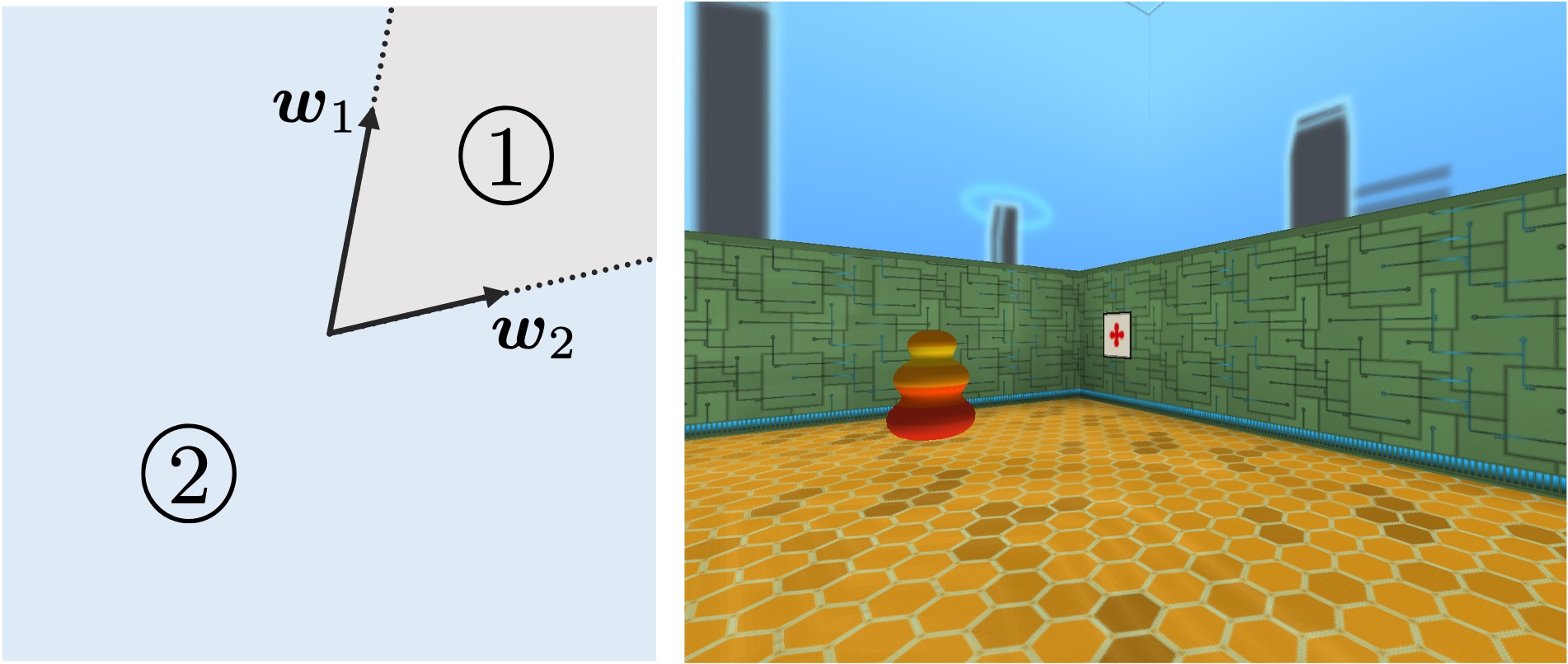

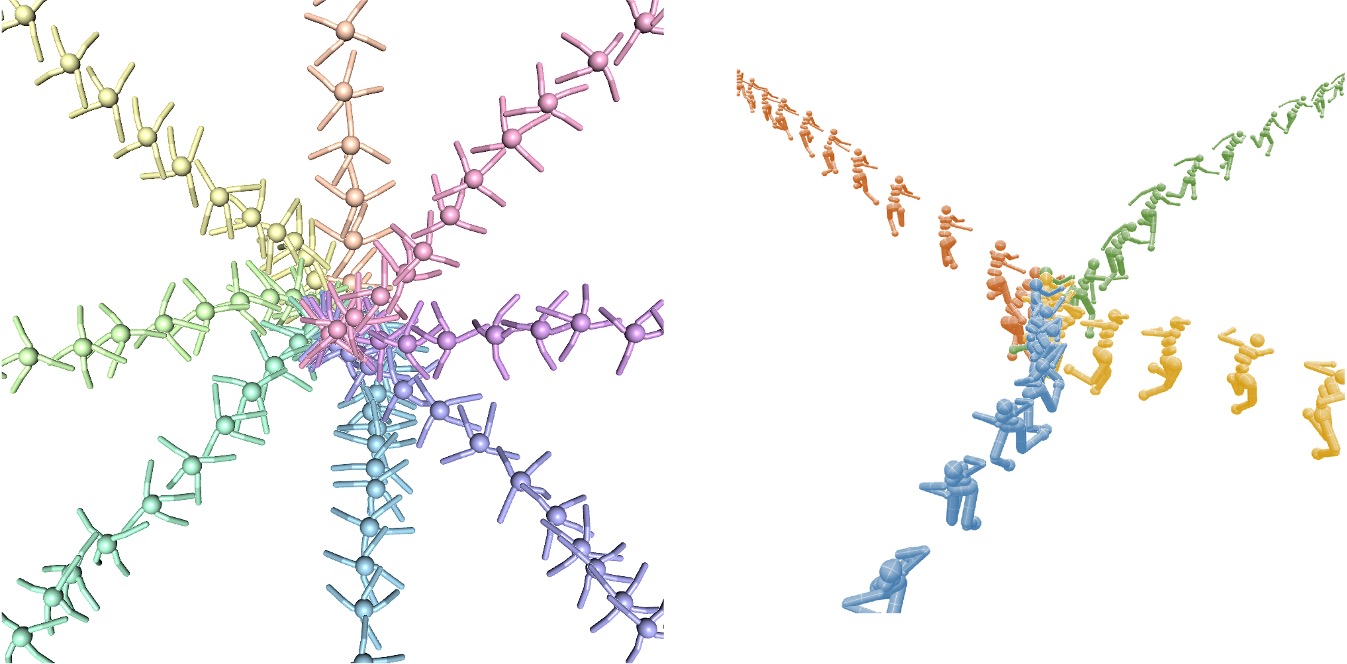

| Lipschitz-constrained Unsupervised Skill Discovery Seohong Park, Jongwook Choi*, Jaekyeom Kim*, Honglak Lee, Gunhee Kim ICLR 2022 [paper] [arxiv] [project] [code] |

| Time Discretization-Invariant Safe Action Repetition for Policy Gradient Methods Seohong Park, Jaekyeom Kim, Gunhee Kim NeurIPS 2021 [paper] [appx] [arxiv] [talk] [code] |

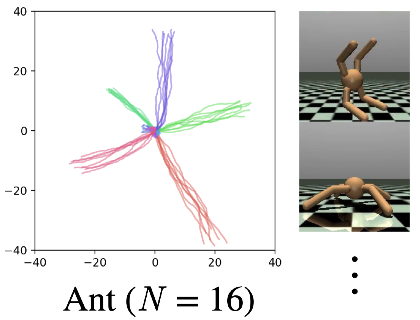

| Unsupervised Skill Discovery with Bottleneck Option Learning Jaekyeom Kim*, Seohong Park*, Gunhee Kim ICML 2021 [paper] [appx] [arxiv] [talk] [code] |

| EMI: Exploration with Mutual Information Hyoungseok Kim*, Jaekyeom Kim*, Yeonwoo Jeong, Sergey Levine, Hyun Oh Song ICML 2019 (Long talk: top ~4.6%) [paper] [supp] [arxiv] [talk] [code] |

Generalization and Robustness

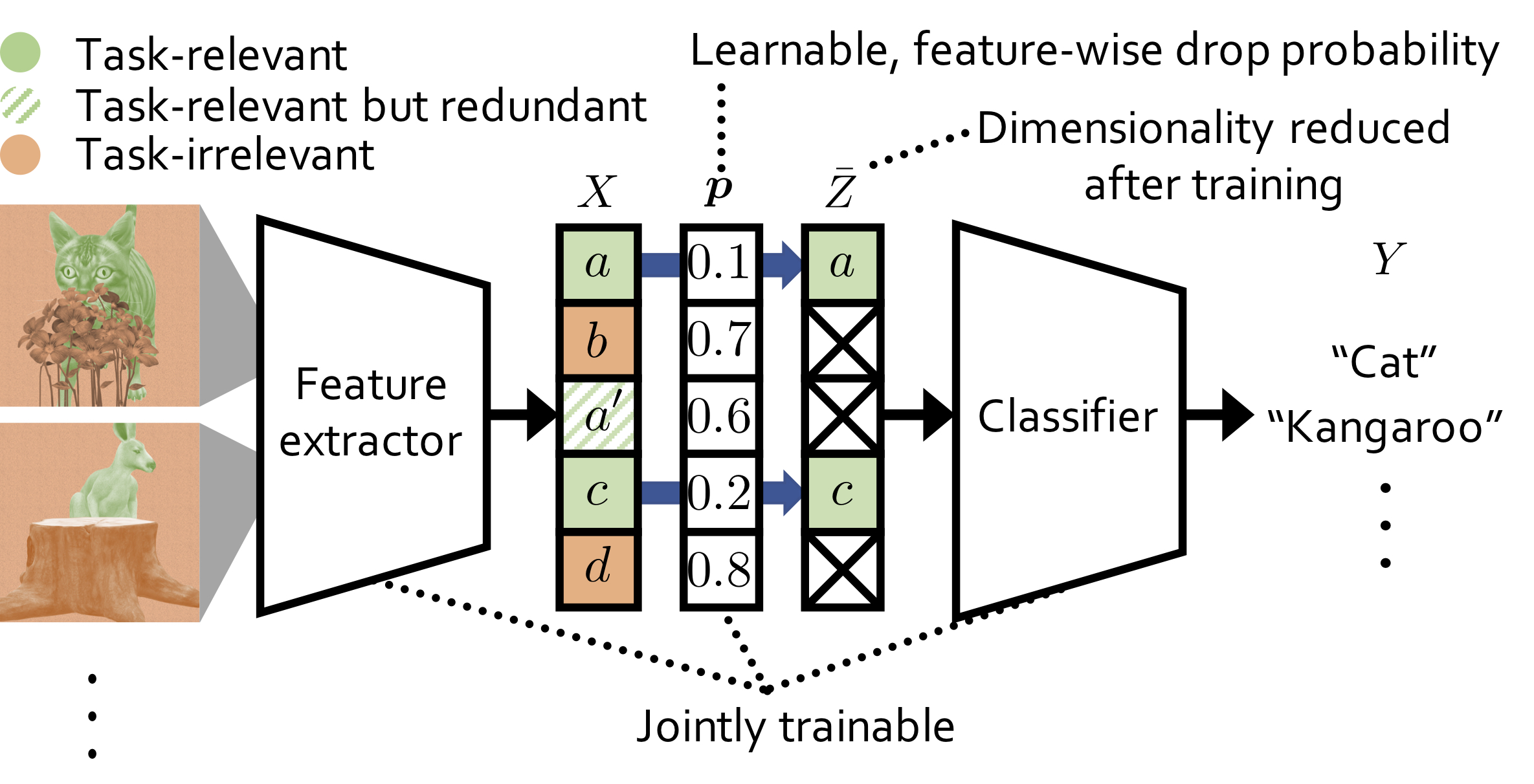

| Drop-Bottleneck: Learning Discrete Compressed Representation for Noise-Robust Exploration Jaekyeom Kim, Minjung Kim, Dongyeon Woo, Gunhee Kim ICLR 2021 [paper] [arxiv] [talk] [code] |

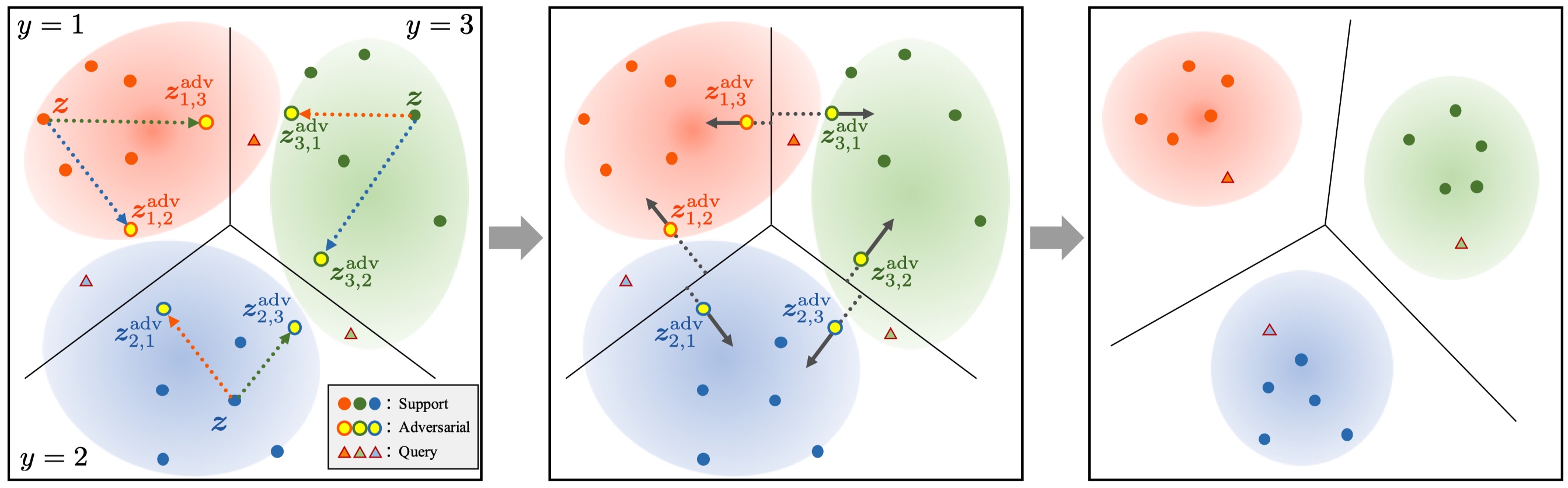

| Model-Agnostic Boundary-Adversarial Sampling for Test-Time Generalization in Few-Shot Learning Jaekyeom Kim, Hyoungseok Kim, Gunhee Kim ECCV 2020 (Oral: top ~2%) [paper] [appx] [talk] [code] |

Honors & Awards

- PhD Dissertation Award (Aug. 2023, Dept. of Computer Science and Engineering, Seoul National University)

- Star Student Researcher Award (Feb. 2023, BK21 Intelligence Computing, Seoul National University)

- Youlchon AI Star Fellowship (Jul. 2022, Youlchon Foundation)

- Naver PhD Fellowship (Dec. 2021, Naver)

- Google PhD Fellowship - Area: Machine Learning (Sep. 2021, Google)

- Samsung Humantech Paper Award - Silver Prize in Signal Processing (Feb. 2021, Samsung Electronics)

- Qualcomm Innovation Fellowship Korea (Dec. 2020, Qualcomm AI Research)

- On-Dream Outstanding Scholar Award (Dec. 2020, Hyundai Motor Chung Mong-Koo Foundation)

- On-Dream Future Talent Graduate Scholarship (Jul. 2020, Hyundai Motor Chung Mong-Koo Foundation)

- Kwanjeong Domestic Scholarship (Apr. 2018, Kwanjeong Educational Foundation)

- Summa Cum Laude Honor (Feb. 2018, Korea Advanced Institute of Science and Technology)

Education

- Seoul National University (SNU) (Mar. 2018 - Aug. 2023)

  - PhD in Computer Science and Engineering

  - Advisors: Prof. Gunhee Kim and Prof. Hyun Oh Song

- Korea Advanced Institute of Science and Technology (KAIST) (Feb. 2010 - Jun. 2017)

  - BS in Computer Science

  - Graduated summa cum laude